Interval Methods and Robust Optimization

by Cassio • 2011/08/28 • Research

Interval methods define representations and operations for computing with intervals. When using intervals, exact quantities are replaced by a range of possibilities, which lets us account for various kinds of errors. These methods are often called interval analysis or interval arithmetic. The latter, however, refers to a specific popular formalism.
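As a rough illustration of what "representations and operations" means here, the following is a minimal sketch of an interval type with addition, subtraction, and multiplication. The `Interval` class and its field names are made up for this example, and rounding errors in the endpoint computations are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """A closed interval [lo, hi] (hypothetical helper, not a library type)."""
    lo: float
    hi: float

    def __add__(self, other):
        # Smallest possible sum and largest possible sum.
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other):
        # Smallest result: our low end minus their high end, and vice versa.
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        # With mixed signs any pair of endpoints can give the extreme product.
        products = [
            self.lo * other.lo, self.lo * other.hi,
            self.hi * other.lo, self.hi * other.hi,
        ]
        return Interval(min(products), max(products))


a = Interval(1.0, 2.0)
b = Interval(-3.0, 4.0)
print(a + b, a - b, a * b)
```

Each result is the tightest interval containing every value the operation can produce when its operands range over `a` and `b`.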

While computing with ranges and uncertainties has been done for a long time, most people consider Moore’s Interval Analysis book from the 1960s to be the seminal work in the field. That’s one of those books everyone cites but very few people read, as numerous modern introductions exist. Wikipedia has a good starting point, as usual.

The original motivation for the introduction of interval arithmetic was to handle rounding errors introduced during numerical computations, as well as measurement errors in the inputs. Regarding the former, it’s interesting to note that Moore’s work predates the IEEE 754 standard for floating point arithmetic by a couple of decades.
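To make the rounding-error idea concrete, here is a small sketch of interval addition with outward rounding. It assumes Python 3.9 or later for `math.nextafter`. The helper name `add_outward` is made up for this example, and widening each endpoint by one floating point step is a conservative stand-in for the directed rounding modes a real interval library would use.

```python
import math
from fractions import Fraction


def add_outward(a_lo, a_hi, b_lo, b_hi):
    """Add [a_lo, a_hi] and [b_lo, b_hi], widening the result by one float step.

    The float sum is rounded to nearest, so the exact sum may lie slightly
    to either side of it. Stepping the endpoints outward keeps it enclosed.
    """
    lo = math.nextafter(a_lo + b_lo, -math.inf)
    hi = math.nextafter(a_hi + b_hi, math.inf)
    return lo, hi


# Treat the floats 0.1 and 0.2 as degenerate (zero-width) intervals.
lo, hi = add_outward(0.1, 0.1, 0.2, 0.2)

# Fractions give the exact sum of the two stored float values.
exact = Fraction(0.1) + Fraction(0.2)
print(Fraction(lo) <= exact <= Fraction(hi))
```

The resulting interval is a little wider than strictly necessary, which is the usual trade: some tightness is given up in exchange for a result that is guaranteed to contain the exact value.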

It turns out that interval arithmetic is useful for many applications besides error handling, including computer graphics, robotics, and many other areas. One particular application is relevant to my work: robust optimization.

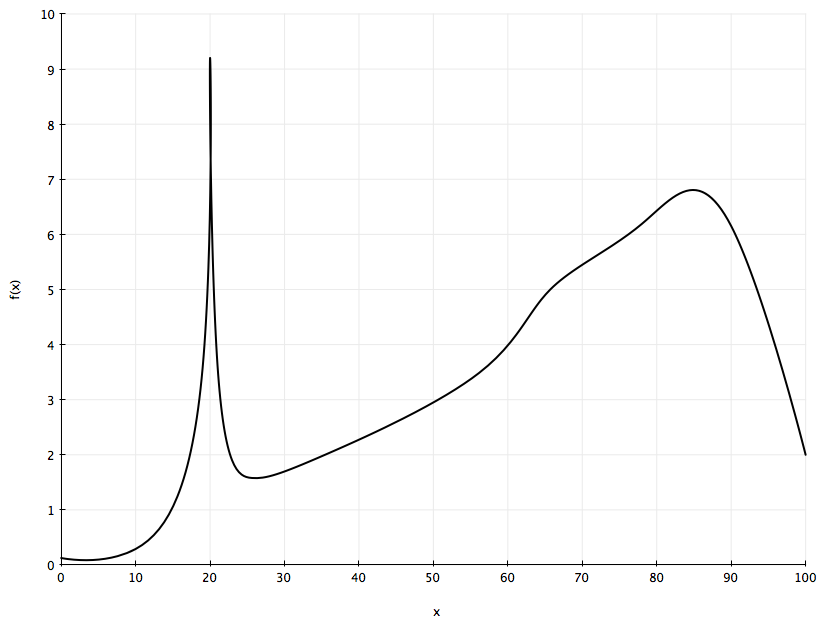

In order to understand robust optimization, let’s take a look at the grossly simplistic function plotted below. Assume we need to find the value of x that maximizes f(x). What’s that value?

It seems pretty obvious, right? In practice, the optimal value depends on how precisely you can measure x. In many real-world scenarios, x is estimated by a noisy process, and that introduces a degree of error. Let’s say that our measurement error is in the [-2, 2] interval, that is, a reading of 50 for x actually means that x could be anywhere from 48 to 52. That would mean there is no single best value for f(x); rather, we have a range of values (an interval).

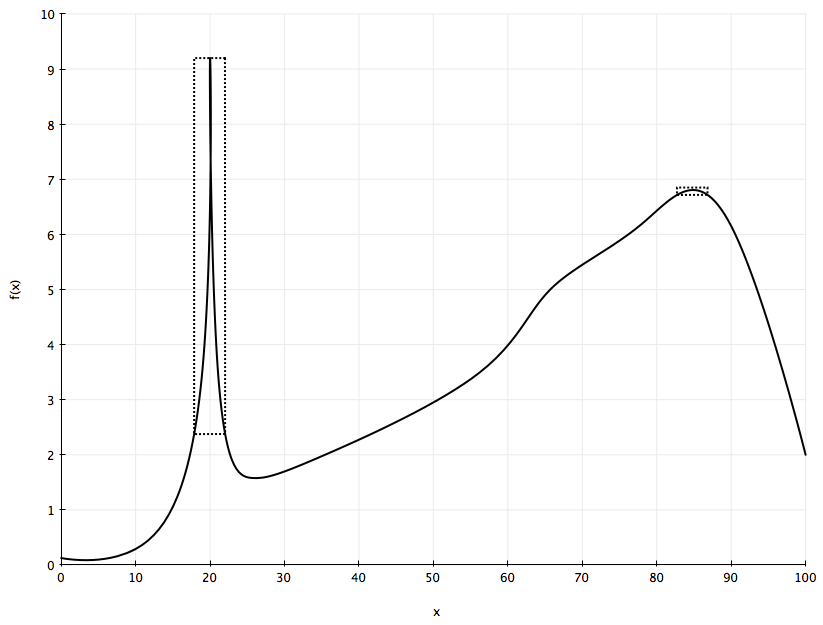

Now, if we want to find the best guaranteed range for f(x), we’re in the field of robust optimization. While there are many ways to define robust optimization, this is a simple and useful one: find the best worst case scenario, or the range of f(x) values with the highest min value (or lowest max value in minimization problems, hence the term min-max optimization). With this definition we see that our desired optimal value changes:

The rectangles in the above chart are made of intervals. On the horizontal axis we have the range of values for x, while the vertical axis corresponds to the resulting range of values for f(x). Using interval arithmetic one can estimate guaranteed bounds for the vertical interval, giving us a worst-case value, which is what we’d want to maximize in robust optimization. In this case we’d much rather have x around 85 than x around 20.
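The comparison between the two candidates can be sketched in code. The function below is not the one in the chart: it is a made-up stand-in with a tall narrow peak at 20 and a lower, wider peak at 85, and the names `sqr`, `bump`, `f_range`, and `worst_case` are all hypothetical. The bounds come from interval reasoning on each term, but they ignore floating point rounding, so treat this as an illustration rather than a rigorous implementation.

```python
import math


def sqr(lo, hi):
    """Range of t*t for t in [lo, hi]."""
    if lo <= 0.0 <= hi:
        return 0.0, max(lo * lo, hi * hi)
    return min(lo * lo, hi * hi), max(lo * lo, hi * hi)


def bump(lo, hi, center, width, height):
    """Range of height * exp(-((x - center) / width)**2) for x in [lo, hi]."""
    s_lo, s_hi = sqr((lo - center) / width, (hi - center) / width)
    # exp(-s) decreases as s grows, so the bounds swap places.
    return height * math.exp(-s_hi), height * math.exp(-s_lo)


def f_range(lo, hi):
    """Enclosure of the made-up f over [lo, hi]."""
    a_lo, a_hi = bump(lo, hi, 20.0, 1.0, 10.0)   # tall, narrow peak
    b_lo, b_hi = bump(lo, hi, 85.0, 15.0, 6.0)   # lower, wide peak
    return a_lo + b_lo, a_hi + b_hi


def worst_case(x, err=2.0):
    """Guaranteed lower bound of f when the true x is within err of the reading."""
    return f_range(x - err, x + err)[0]


candidates = range(0, 101)
nominal_best = max(candidates, key=lambda x: f_range(x, x)[1])
robust_best = max(candidates, key=worst_case)
print(nominal_best, robust_best)
```

With these made-up peaks, the nominal optimum sits on the narrow peak at 20, while the reading with the best worst case is 85, mirroring the conclusion drawn from the chart.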

There are multiple ways to incorporate intervals into optimization algorithms. The most common and natural one is to use them as a filter in a branch-and-bound method.
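To give a feel for the filtering idea, here is a minimal branch-and-bound sketch that maximizes a toy function, f(x) = x - x², over [0, 3]. Everything in it (the function, `f_upper`, `maximize`, the `tol` parameter, the best-first ordering) is an assumption made for illustration, not a description of any particular solver. The interval bound is used only to discard boxes that cannot contain a better point than the best one found so far.

```python
import heapq


def f(x):
    return x - x * x


def f_upper(lo, hi):
    """Upper bound of f on [lo, hi], valid for 0 <= lo <= hi.

    x lies in [lo, hi] and x*x lies in [lo*lo, hi*hi], so x - x*x <= hi - lo*lo.
    The bound is usually loose, but it is never below the true maximum.
    """
    return hi - lo * lo


def maximize(lo, hi, tol=1e-6):
    best_x = 0.5 * (lo + hi)
    best_val = f(best_x)
    # heapq is a min-heap, so store negated upper bounds to pop the most
    # promising box first.
    heap = [(-f_upper(lo, hi), lo, hi)]
    while heap:
        neg_ub, a, b = heapq.heappop(heap)
        if -neg_ub < best_val:
            continue  # filter: this box cannot beat the best point so far
        mid = 0.5 * (a + b)
        if f(mid) > best_val:
            best_x, best_val = mid, f(mid)
        if b - a <= tol:
            continue  # box is small enough, stop splitting it
        for c, d in ((a, mid), (mid, b)):
            ub = f_upper(c, d)
            if ub >= best_val:
                heapq.heappush(heap, (-ub, c, d))
    return best_x, best_val


best_x, best_val = maximize(0.0, 3.0)
print(best_x, best_val)
```

The true maximum of this toy function is 0.25 at x = 0.5. Because the box containing 0.5 always has an upper bound of at least 0.25, it is never filtered out, and the search narrows in on it.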

In the next post, we’ll look into interval arithmetic in more detail, including some of its shortcomings.